Public health events, particularly large-scale infectious disease outbreaks, have increasingly drawn attention to their impacts on the global economy and financial markets. The COVID-19 pandemic, which erupted in 2020, stands as a significant recent public health event that not only caused widespread infections and fatalities in a short period but also triggered a global economic recession and turmoil in financial markets (1). The pandemic has had far-reaching effects on various aspects of the global socio-economic landscape, exposing the vulnerabilities of the global economic system. Market volatility significantly increased during the pandemic, reflecting not only investor uncertainty about future economic prospects but also revealing the market's heightened sensitivity to risk (2). Against this backdrop, the healthcare sector, due to its crucial role in combating the pandemic, became a focal point for market attention. During the stock market crash in the United States triggered by the pandemic, stocks related to the healthcare industry performed exceptionally well (3), indicating that the volatility of healthcare stock indices reflects not only changes in industry supply and demand but also serves as a significant indicator of market sentiment and risk appetite. As the frontline in responding to the pandemic, the volatility of healthcare stock indices was particularly pronounced during this period. Prior to the pandemic, the operation of public health systems was relatively stable, with healthcare resource allocation and utilization rates being consistent; the healthcare sector was viewed as a relatively stable and defensive investment area. However, the outbreak and spread of the virus disrupted this balance and stability, placing immense pressure on public health systems, straining healthcare resources, and impeding socio-economic activities. 
During the pandemic, healthcare stock indices exhibited significant volatility, influenced by the dynamic developments of the pandemic, policy measures, and public sentiment (4). As the pandemic gradually came under control, healthcare stock indices displayed different patterns of recovery and adjustment in the later stages (5). Post-pandemic, with the gradual relaxation of control measures and the promotion of vaccination, public health systems began to recover, although long-term impacts remain. In such a complex and fluctuating market environment, accurately predicting the trends of healthcare stock indices is of great importance for investors and policymakers. Traditional forecasting methods often struggle to handle the complexity and non-linearity of market data (6). In recent years, advancements in machine learning and deep learning technologies, particularly the application of Bayesian Convolutional Neural Networks (Bayes-CNN), have provided new perspectives and methodologies for research in this field.

Bayesian Convolutional Neural Networks (Bayes-CNN), which combine Bayesian statistics with convolutional neural network technology, have emerged as a promising method in this context (7). Compared to traditional GARCH models and other machine learning models, deep learning models such as CNNs demonstrate higher predictive accuracy and stability when handling financial time series data (8, 9). By incorporating Bayesian methods, Bayes-CNN effectively addresses uncertainty, non-linear relationships, and noise during the modeling process (10). Specifically, Bayes-CNN can adaptively adjust based on historical data and prior knowledge when processing different datasets, thereby enhancing the model's predictive accuracy (11). In contrast to traditional neural networks, Bayes-CNN exhibits greater robustness and generalization capabilities when facing complex and variable uncertainty environments (12). This makes Bayes-CNN a powerful tool for predicting the trends of healthcare stock indices before, during, and after the pandemic. The aim of this study is to conduct a systematic prediction of healthcare stock indices across the pre-pandemic, pandemic, and post-pandemic periods using the Bayes-CNN model. First, this paper will explore the specific impacts of the pandemic on healthcare stock indices and analyze the patterns of their volatility. Next, we will construct the Bayes-CNN model and train it using historical data, evaluating its predictive performance across different time periods. Additionally, to validate the effectiveness and reliability of our conclusions, we will incorporate European data and the GARCH model into the empirical analysis. Finally, this study will discuss the impact of the pandemic on healthcare stock indices based on the predictive results, providing valuable insights for related investment planning and policy formulation.

This study aims to investigate the impact of public health events on the volatility of the CSI Medical Service Index and to conduct a comparative analysis of volatility predictions across three periods: before the pandemic, during the pandemic, and after the pandemic. The research seeks to reveal the specific effects of the pandemic on the volatility of healthcare stock indices. This study holds significant theoretical and practical implications. Theoretically, by comparing the volatility of healthcare stock indices across different time periods, this research enriches the theoretical understanding of the relationship between public health events and financial market volatility. In particular, the application of the deep learning Bayes-CNN model for volatility prediction offers a new method and perspective for predicting financial market volatility (13). Practically, the findings of this study provide valuable insights for investors regarding risk management during public health events, assisting them in making more informed decisions in uncertain market environments. Additionally, policymakers can leverage the findings to better understand the impact of the pandemic on the healthcare industry and financial markets, allowing them to develop more effective market regulation and intervention measures to maintain financial market stability.

The structure of this paper is as follows: the second section is a literature review that examines relevant research on public health events and financial market volatility, volatility forecasting models, and the Bayes-CNN model. The third section introduces the data and methodology of the study, including data sources, model construction, research design, and evaluation metrics. The fourth section presents the empirical analysis, showcasing the optimization results of the models and the predicted volatility across different periods, along with comparative and validity analyses. The fifth section concludes the paper by summarizing the main findings, discussing the limitations of the research, and suggesting directions for future studies.

2 Literature review

The impact of public health events on financial markets has gradually become a focal point of research in recent years. Baker et al. (2) demonstrated that the COVID-19 pandemic led to a sharp increase in global economic uncertainty and a significant rise in financial market volatility. Their study quantified the pandemic's impact on financial markets through the analysis of an economic uncertainty index, finding that stock market volatility increased markedly during the pandemic. In the early stages of the outbreak, investors, facing heightened uncertainty, generally shifted toward safe-haven assets, resulting in capital outflows from the stock market and declining prices. Al-Awadhi et al. (14) further analyzed the short-term effects of the pandemic on the Chinese stock market, revealing that the rapid spread and worsening situation of the pandemic led to a significant decline in stock prices across China. In this context, investor sentiment became more cautious, leading to decreased market liquidity and increased market volatility. Wagner and Ramelli (4) studied the pandemic's effects on corporate stock prices, finding that the pandemic increased systemic market risk. Due to supply chain disruptions, reduced demand, and production halts, many companies experienced substantial declines in stock prices in the early stages of the pandemic, resulting in severe market adjustments. However, certain sectors, such as technology and healthcare, performed relatively well due to increased demand and adaptability of their business models. Zhang et al. (1) provided a comprehensive analysis of financial markets, pointing out significant differences in the pandemic's impact across various financial markets. Notably, developed and emerging markets exhibited different responses to the pandemic's shocks.
Developed countries, with relatively stable economic foundations, experienced faster recovery in financial markets, while emerging markets faced greater pressure and a slower recovery (15). Goodell (16) emphasized the long-term impacts of public health events on financial markets, noting that pandemics not only have short-term market shocks but may also lead to long-term structural economic changes. Financial markets must adapt to these changes and reassess risks and returns. Additionally, research indicates that public health events can also affect governments and the public. For example, public sector provision of insurance may crowd out private sector initiatives, the public's attitudes toward government may change due to the pandemic, and social trust can influence the financial system. Barro et al. (17) validated this viewpoint through historical data analysis of past public health events, indicating that pandemics similar to COVID-19 typically impact overall economic activity, leading to declines in stock prices and increases in volatility.

Under the influence of public health events, the performance of healthcare stock indices exhibits unique characteristics and patterns of variation. During the COVID-19 pandemic, healthcare stock indices demonstrated significant volatility; however, compared to other sectors, the healthcare industry overall displayed greater stability and growth potential. Due to its crucial role in public health events, the healthcare sector exhibited heightened volatility and trading activity during the pandemic. Mazur et al. (3) indicated that during the COVID-19 pandemic, the performance of the healthcare and technology sectors surpassed that of other industries, with a notable increase in investor demand for these sectors, highlighting the importance of studying the volatility of healthcare stock indices. In the early stages of the pandemic, healthcare stock indices may have experienced short-term declines influenced by market panic. However, as the pandemic spread and demand for healthcare surged, these indices quickly rebounded, displaying a strong upward trend. Al-Awadhi et al. (14) found that during the pandemic, stocks in the information technology and pharmaceutical manufacturing sectors significantly outperformed the market. Expectations for the healthcare sector likely shifted dramatically during the pandemic, and these changes were directly reflected in the performance of healthcare stock indices. As the pandemic progressed, the investment returns in the healthcare sector notably exceeded those of other industries, particularly for companies with core competencies in pandemic prevention and treatment. Pagano et al. (18), using the COVID-19 pandemic as an experiment, concluded that asset markets allocated time-varying prices for companies' disaster risk exposures, revealing how the market prices the resilience of different companies to disaster risks and how this pricing reflects shifts in investor perceptions of risk as disasters unfold. 
This research can provide insights into the price volatility of companies in the healthcare sector. Given its pivotal role in pandemic control, the healthcare sector attracted substantial capital inflows, becoming a significant choice for investors seeking safe havens and stable returns. Furthermore, Hunjra et al. (19) examined the impact of government health measures during COVID-19 on the volatility of capital markets in East Asian economies, finding that different health policy measures influenced investor behavior and resulted in stock market volatility. Gheorghe et al. (20) studied the relationship between national healthcare system performance and stock volatility during the COVID-19 pandemic, noting that the connection between these two variables was significantly stronger during the pandemic. Su et al. (21) explored the relationship between healthcare financial expenditures (FE) and the pharmaceutical sector stock index (SP), indicating that there exists both positive and negative correlations between FE and SP, which should be examined in conjunction with other events and market conditions. The healthcare stock indices studied in this paper are closely related to government health measures.

Traditional financial market forecasting methods often struggle with complex and non-linear data. The GARCH model proposed by Bollerslev (22) effectively captures volatility clustering effects in financial time series by considering conditional heteroscedasticity. However, the GARCH model may have limitations when faced with complex non-linear data. Gunnarsson et al. (23) highlighted that AI and machine learning (ML) methods show promising effectiveness in volatility forecasting, often providing results comparable to or better than those of econometric methods. Lu et al. (24) found that machine learning models outperformed traditional forecasting models in predicting oil futures volatility, indicating that machine learning can effectively handle non-linearities in data sequences and capture important information related to oil futures market volatility. Deep learning models, such as Convolutional Neural Networks (CNN), Recurrent Neural Networks (RNN), and Long Short-Term Memory networks (LSTM), may exhibit superior performance in processing high-dimensional, non-linear data. Fischer and Krauss (25) investigated the predictive power of LSTM in financial markets, discovering that LSTM networks outperformed memoryless classification methods, such as Random Forest (RF), Deep Neural Networks (DNN), and Logistic Regression (LOG), demonstrating significant advantages in capturing complex patterns in time series data. Bayesian Convolutional Neural Networks (Bayes-CNN) offer a new approach to handling uncertainty and non-linear relationships in volatility forecasting by combining Bayesian statistics with convolutional neural networks. This method captures complex data patterns while quantifying prediction uncertainty. Hernández-Lobato and Adams (26) utilized probabilistic backpropagation in Bayesian neural networks and found it to have higher robustness and predictive accuracy when handling high-dimensional data, along with faster computational speed.
Gal and Ghahramani (11) proposed that using dropout as a Bayesian approximation can effectively quantify uncertainty in neural networks without sacrificing computational complexity or testing accuracy. Wei and Chen (27) employed Bayesian Convolutional Neural Networks to quantify uncertainty in solving the inverse scattering problem (ISP), demonstrating the excellent performance of deep learning schemes (DLS). Feng et al. (28) applied Bayes-CNN to predict seismic phase classification, noting that the model can assess uncertainty in predictions and outperforms standard neural networks. These methods can be transferred to financial market predictions to showcase their potential, particularly in addressing high volatility and uncertainty in the market.

Despite the extensive research on the impact of public health events on financial markets and the application of various volatility prediction models, the use of the Bayes-CNN model in economic analysis remains limited, particularly in the context of predicting the volatility of the China CSI Medical Service Index. Specifically, studies employing the Bayes-CNN model to conduct comparative analyses of healthcare stock index volatility across the pre-pandemic, during-pandemic, and post-pandemic phases are still underdeveloped. This paper enriches the literature in this field by systematically analyzing the impact of the pandemic on the volatility of the China CSI Medical Service Index using the advanced Bayes-CNN model, and it provides a comprehensive understanding of the changes in volatility across different phases.

3 Data and methodology

3.1 Data

This study focuses on the perspective of public health events and selects the CSI (China Securities Index) Medical Service Index as the core indicator of the research, referred to as “CSI Medical Service” with index code 399989. The index is based on a reference date of December 31, 2004, with a base value of 1,000 points. The CSI Medical Service Index includes securities from listed companies in the medical and healthcare industry, encompassing sectors such as medical devices, medical services, and healthcare information technology, thereby comprehensively reflecting the overall performance of healthcare-related companies in China. The CSI Medical Service Index aims to provide investors with an effective market benchmark by integrating the market performance of various sub-sectors within the healthcare industry. The sample stocks of the index cover multiple sub-industries, including medical devices, pharmaceuticals, biotechnology, medical services, and healthcare information technology, effectively showcasing the diversity and overall development trends of the healthcare sector. Specifically, the selection criteria for the CSI Medical Service Index include that companies must be listed on either the main board or the ChiNext board of the Chinese securities market, with their primary business related to the aforementioned healthcare fields. The sample stocks are adjusted annually to ensure the index's representativeness and forward-looking nature. This index not only aids investors in understanding market dynamics within the healthcare sector but also serves as a reference for policymakers regarding industry development. The data involved in this study spans from December 31, 2004, to July 9, 2024, with a daily frequency. Volatility is calculated following the methodology of Zhou and Zhou (29). The specific calculation formula is as follows:

$$vol_t = \frac{High_t - Low_t}{Avg_t} \tag{1}$$

In Equation (1), $vol_t$ represents the intraday volatility of the CSI Medical Service Index on day $t$. $High_t$ denotes the highest price of the CSI Medical Service Index on day $t$, $Low_t$ denotes the lowest price on day $t$, and $Avg_t$ denotes the average price on day $t$. The calculation reflects the maximum volatility of the CSI Medical Service Index on day $t$. Higher volatility indicates more severe fluctuations in financial asset prices, leading to greater uncertainty in asset returns. Conversely, lower volatility signifies more moderate price changes. In this study, volatility is primarily used as a measure of risk. By examining the volatility of the CSI Medical Service Index, we aim to gain a deeper understanding of the impact of public health events on the healthcare industry and to provide valuable insights for investors and policymakers.
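As a minimal sketch of Equation (1), the range-based volatility can be computed per trading day as shown below. The function name `intraday_volatility` and the sample price levels are hypothetical, and because the text does not state how the daily average price is obtained, the sketch simply takes it as an input.

```python
def intraday_volatility(high, low, avg):
    """Equation (1): (High_t - Low_t) / Avg_t for each trading day t."""
    if not (len(high) == len(low) == len(avg)):
        raise ValueError("high, low and avg must have the same length")
    vols = []
    for h, l, a in zip(high, low, avg):
        if a <= 0 or h < l:
            raise ValueError("expected avg > 0 and high >= low")
        vols.append((h - l) / a)
    return vols


# Hypothetical index levels for three trading days (not real CSI data).
high = [1020.0, 1035.0, 1010.0]
low = [1000.0, 1005.0, 1000.0]
avg = [1010.0, 1020.0, 1005.0]
vol = intraday_volatility(high, low, avg)
```

Because the range is divided by the price level, the measure is unit-free and comparable across periods in which the index trades at very different levels.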

3.2 Research methodology

3.2.1 Convolutional neural networks

Convolutional Neural Networks (CNNs) are commonly used deep learning architectures for processing image and time series data. The main components of a CNN include convolutional layers, pooling layers, and fully connected layers. The convolutional layer is the core component of a CNN, responsible for extracting local features from the input data through convolution operations. Convolutional layers utilize multiple convolutional kernels (filters) that slide over the input data to generate a set of feature maps. Each convolutional kernel is capable of detecting different features, such as edges, textures, or other patterns. The equation is as follows:

$$(f * g)(i, j) = \sum_{m} \sum_{n} f(m, n) \, g(i - m, j - n) \tag{2}$$

In this equation, $f$ represents the input image, $g$ denotes the convolutional kernel, and $i$ and $j$ are the coordinates of the output feature map. Convolutional layers typically employ non-linear activation functions to introduce non-linearity, further enhancing the model's expressive power (30).
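To make Equation (2) concrete, the following sketch evaluates the double sum directly on small nested lists. The function name `conv2d_full` and the toy inputs are illustrative assumptions; the output has the "full" size, meaning every position where the kernel and input overlap is included.

```python
def conv2d_full(f, g):
    """Discrete 2-D convolution of Equation (2) with 'full' output size."""
    fh, fw = len(f), len(f[0])
    gh, gw = len(g), len(g[0])
    out = [[0.0] * (fw + gw - 1) for _ in range(fh + gh - 1)]
    for m in range(fh):
        for n in range(fw):
            for p in range(gh):
                for q in range(gw):
                    # Output index (i, j) = (m + p, n + q), so the kernel
                    # term g(p, q) is exactly g(i - m, j - n).
                    out[m + p][n + q] += f[m][n] * g[p][q]
    return out


f = [[1.0, 2.0], [3.0, 4.0]]    # toy "input image"
g = [[1.0, 0.0], [0.0, -1.0]]   # toy kernel
result = conv2d_full(f, g)
# result == [[1.0, 2.0, 0.0], [3.0, 3.0, -2.0], [0.0, -3.0, -4.0]]
```

Many deep learning libraries implement the closely related cross-correlation (the kernel is not flipped); since the kernel weights are learned, the distinction does not affect what the layer can represent.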

Pooling layers are used to downsample feature maps, reducing computational complexity and the number of parameters while preserving important features. Common pooling methods include max pooling and average pooling. The formula for max pooling is as follows:

$$y(i, j) = \max_{(m, n) \in \text{pooling region}} x(i + m, j + n) \tag{3}$$

In this context, $x$ represents the input feature map, $y$ denotes the pooled feature map, and $m$ and $n$ are indices representing local regions (the convolutional kernel or pooling area) used to traverse the pixels or feature values within the input image. The pooling layer compresses the feature map by selecting the maximum or average value from the local region, which helps to retain features to some extent while reducing the dimensionality of the data (31). The fully connected layer integrates features from the convolutional and pooling layers and maps them to the output layer. Each node in the fully connected layer is connected to all nodes in the previous layer, similar to traditional artificial neural networks (ANNs). The formula for the fully connected layer is as follows:

$$y = f(Wx + b) \tag{4}$$

In this formula, $W$ represents the weight matrix, $x$ is the input vector, $b$ is the bias vector, $f$ is the activation function, and $y$ denotes the output of the fully connected layer. The fully connected layer is typically located at the end of the network, mapping high-level abstract features to the final classification or regression results (32).
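The pooling and fully connected operations described above can be sketched in a few lines. This is an illustration under stated assumptions: the pooling windows are non-overlapping (stride equal to the window size), the activation is `tanh`, and the names `max_pool2d` and `dense` as well as all numbers are hypothetical; the text does not specify any of these choices.

```python
import math


def max_pool2d(x, size=2):
    """Max pooling over non-overlapping size x size windows (Equation 3)."""
    rows, cols = len(x) // size, len(x[0]) // size
    return [
        [
            max(x[i * size + m][j * size + n]
                for m in range(size) for n in range(size))
            for j in range(cols)
        ]
        for i in range(rows)
    ]


def dense(W, x, b, f=math.tanh):
    """Fully connected layer: activation f applied to W x + b."""
    return [f(sum(w * xi for w, xi in zip(row, x)) + bi)
            for row, bi in zip(W, b)]


feature_map = [
    [1, 5, 2, 0],
    [3, 4, 1, 1],
    [0, 2, 9, 6],
    [7, 1, 3, 8],
]
pooled = max_pool2d(feature_map)            # [[5, 2], [7, 9]]
flat = [v for row in pooled for v in row]   # flatten before the dense layer
y = dense([[0.1, 0.0, -0.1, 0.2]], flat, [0.0])
```

The 4 x 4 feature map is reduced to 2 x 2 by keeping the largest value in each window, and the flattened result is then mapped to a single output by the dense layer.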

3.2.2 Bayesian inference

Bayesian Inference introduces Bayesian statistical methods to probabilistically handle the parameters of CNN models, thereby capturing uncertainties in the data. This process mainly involves Variational Inference and Markov Chain Monte Carlo (MCMC) methods. The goal of Bayesian inference is to update the posterior distribution of model parameters by combining prior distributions with observed data (33). Bayes' theorem provides the mathematical foundation for this process, as expressed in the following formula:

$$P(\theta \mid X) = \frac{P(X \mid \theta) \, P(\theta)}{P(X)} \tag{5}$$

In this context, $\theta$ represents the model parameters, $X$ denotes the observed data, $P(\theta \mid X)$ is the posterior distribution, $P(X \mid \theta)$ is the likelihood function, $P(\theta)$ is the prior distribution, and $P(X)$ is the marginal likelihood. Bayesian inference reflects the uncertainty in the data through the posterior distribution and provides probabilistic estimates of the model parameters.
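A small worked example may help fix the roles of the terms in Equation (5). The sketch below applies Bayes' theorem on a discrete grid of three candidate parameter values; the Bernoulli likelihood, the uniform prior, and the "3 up days out of 4" data are purely hypothetical and are not taken from the study.

```python
def grid_posterior(thetas, prior, likelihood):
    """Posterior over a discrete grid of parameter values via Equation (5)."""
    unnormalized = [likelihood(t) * p for t, p in zip(thetas, prior)]
    evidence = sum(unnormalized)  # marginal likelihood P(X)
    return [u / evidence for u in unnormalized]


thetas = [0.1, 0.5, 0.9]        # candidate values of theta
prior = [1 / 3, 1 / 3, 1 / 3]   # uniform prior P(theta)


def likelihood(theta):
    # Hypothetical data X: 3 successes and 1 failure in 4 Bernoulli trials.
    return theta ** 3 * (1 - theta)


posterior = grid_posterior(thetas, prior, likelihood)
```

The posterior sums to one by construction, and most of its mass moves toward the value of theta that makes the observed data most likely.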

Variational Inference is a deterministic inference method that approximates a complex posterior distribution with a simpler parameterized distribution. This is achieved by maximizing the variational lower bound, also known as the evidence lower bound (ELBO), which is equivalent to minimizing the Kullback-Leibler (KL) divergence between the approximate distribution and the true posterior distribution. The KL divergence is defined as follows:

$$\mathrm{KL}\big(q(\theta) \,\|\, p(\theta \mid X)\big) = \int q(\theta) \log \frac{q(\theta)}{p(\theta \mid X)} \, d\theta \tag{6}$$

Here, $q(\theta)$ represents the approximate distribution, and $p(\theta \mid X)$ denotes the posterior distribution. By optimizing the Variational Lower Bound, Variational Inference can effectively approximate the posterior distribution while maintaining high computational efficiency in high-dimensional spaces.
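As an illustration of Equation (6), the sketch below evaluates the KL integral numerically for two one-dimensional Gaussians and compares it with the known closed-form expression for that case. Treating both distributions as Gaussian, the integration range, and the function names are assumptions made only for this example.

```python
import math


def normal_pdf(x, mu, sigma):
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def kl_numeric(mu_q, s_q, mu_p, s_p, lo=-20.0, hi=20.0, steps=40000):
    """Midpoint-rule approximation of Equation (6) for two 1-D Gaussians."""
    dx = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        x = lo + (k + 0.5) * dx
        q = normal_pdf(x, mu_q, s_q)
        p = normal_pdf(x, mu_p, s_p)
        if q > 0.0 and p > 0.0:  # skip points where a density underflows
            total += q * math.log(q / p) * dx
    return total


def kl_closed_form(mu_q, s_q, mu_p, s_p):
    """Closed-form KL(q || p) when q and p are both 1-D Gaussians."""
    return (math.log(s_p / s_q)
            + (s_q ** 2 + (mu_q - mu_p) ** 2) / (2.0 * s_p ** 2) - 0.5)


kl_num = kl_numeric(0.0, 1.0, 1.0, 2.0)
kl_exact = kl_closed_form(0.0, 1.0, 1.0, 2.0)
```

The divergence is zero only when the two distributions coincide and is not symmetric in its arguments, which is why the direction KL(q || p) in Equation (6) matters.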

Markov Chain Monte Carlo (MCMC) methods are a class of stochastic sampling techniques used to sample from posterior distributions by constructing a Markov chain. Common MCMC methods include the Metropolis-Hastings algorithm and Hamiltonian Monte Carlo (HMC) algorithm. The principle of the MCMC method is as follows:

$$\pi(\theta) = \frac{1}{N} \sum_{i=1}^{N} \delta(\theta - \theta_i) \tag{7}$$

In this context, $\pi(\theta)$ is the empirical approximation of the posterior distribution formed from the sample set, $\theta_i$ denotes the $i$-th sample drawn from the posterior distribution, $N$ is the number of samples, and $\delta$ is the Dirac delta function. MCMC methods can generate samples that approximate the posterior distribution, making them suitable for inference in high-dimensional complex distributions. The application of Bayesian inference in predicting volatility in financial markets involves quantifying the uncertainty of model parameters, thereby enhancing the reliability and robustness of predictions. This is particularly important for risk management and investment decision-making, as it helps investors better understand market risks and make more informed decisions.
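A random-walk Metropolis-Hastings sampler, one of the MCMC methods named above, can be sketched as follows. The Gaussian target with mean 2 and standard deviation 0.5, the proposal step size, the burn-in length, and the function name are all assumptions chosen for illustration; they are not the settings used in the study.

```python
import math
import random


def metropolis_hastings(log_target, theta0, n_samples, step=1.0, seed=0):
    """Random-walk Metropolis-Hastings with a Gaussian proposal."""
    rng = random.Random(seed)
    theta, logp = theta0, log_target(theta0)
    samples = []
    for _ in range(n_samples):
        proposal = theta + rng.gauss(0.0, step)
        logp_new = log_target(proposal)
        # Accept with probability min(1, target(proposal) / target(theta)).
        if rng.random() < math.exp(min(0.0, logp_new - logp)):
            theta, logp = proposal, logp_new
        samples.append(theta)
    return samples


def log_target(theta):
    # Unnormalized log density of a toy posterior: Gaussian(2, 0.5).
    return -0.5 * ((theta - 2.0) / 0.5) ** 2


chain = metropolis_hastings(log_target, theta0=0.0, n_samples=20000)
kept = chain[2000:]                      # discard burn-in draws
posterior_mean = sum(kept) / len(kept)   # expectation under the sample average
```

Averaging any function of the retained draws is the practical use of the empirical distribution built from the samples: posterior expectations become simple sample means.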

3.2.3 Bayesian Convolutional Neural Networks

Bayesian Convolutional Neural Networks (Bayes-CNN or BCNN) enhance the ability of traditional Convolutional Neural Networks (CNN) to handle uncertainty and improve predictive performance by integrating Bayesian inference methods. Traditional CNNs often overlook the uncertainties present in financial time series data, such as volatility predictions, resulting in insufficient robustness and generalization capabilities. In contrast, Bayes-CNN effectively captures the uncertainty in the data by treating model parameters probabilistically, thereby enhancing both the accuracy and stability of predictions.

The structure of the Bayes-CNN model is similar to that of traditional CNNs, but there are significant differences in parameter handling and inference methods. The model structure of Bayes-CNN includes the following key components: Convolutional Layers: These layers are used to extract local features from the input data. In volatility prediction, convolutional layers can effectively capture local patterns and trends in time series data. Pooling Layers: These layers are utilized to reduce the dimensionality of feature maps, thereby decreasing computational complexity while preserving important features. Fully Connected Layers: These layers combine and map the extracted features to generate the final prediction results (34). In Bayes-CNN, the weight parameters of the fully connected layers are treated as random variables that follow a specific probability distribution. Bayesian Inference Module: This module is the key differentiator between Bayes-CNN and traditional CNNs. The Bayesian inference module estimates the posterior distribution of the model parameters using methods such as Variational Inference (VI) or Markov Chain Monte Carlo (MCMC).

In volatility prediction, the Bayes-CNN model can be applied through the following steps: Data Preprocessing: Financial time series data are standardized and normalized, and necessary data augmentation is performed. These steps help to reduce data noise and improve the effectiveness of model training. Model Training: Historical volatility data are used to train the Bayes-CNN model. During the training process, it is essential to optimize the Bayesian inference module to estimate the posterior distribution of the model parameters. Variational inference methods typically approximate the posterior distribution by optimizing the Variational Lower Bound, while MCMC methods sample from the posterior distribution by constructing a Markov chain. Continuous adjustments are made to the model's hyperparameters. Model Prediction: After obtaining the optimal combination of hyperparameters, the trained Bayes-CNN model is utilized to predict future volatility. Through these steps, the Bayes-CNN model effectively leverages the characteristics of financial time series data, enhancing the accuracy and reliability of volatility predictions, and providing robust support for investors and risk managers.
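The prediction step can be illustrated with a deliberately tiny sketch: a fixed one-dimensional convolution kernel, a ReLU, global max pooling, and an output layer whose weight and bias are drawn from an approximate Gaussian posterior. Everything here is a simplifying assumption made for illustration (the architecture, the posterior means and standard deviations, the input window, and the names `forward` and `predictive_distribution`); it is not the network used in this study. What it shows is how repeated draws of the fully connected weights turn a single forecast into a predictive mean with an uncertainty estimate.

```python
import random
import statistics


def conv1d_valid(x, kernel):
    """1-D sliding-window filter without padding ('valid' output)."""
    k = len(kernel)
    return [sum(x[i + j] * kernel[j] for j in range(k))
            for i in range(len(x) - k + 1)]


def forward(x, kernel, w_out, b_out):
    hidden = [max(0.0, h) for h in conv1d_valid(x, kernel)]  # conv + ReLU
    pooled = max(hidden)                                     # global max pooling
    return w_out * pooled + b_out                            # output layer


def predictive_distribution(x, kernel, posterior, n_draws=200, seed=0):
    """Monte Carlo predictive mean and std from sampled output-layer weights."""
    rng = random.Random(seed)
    draws = []
    for _ in range(n_draws):
        w_out = rng.gauss(*posterior["w_out"])  # (mean, std) of the weight
        b_out = rng.gauss(*posterior["b_out"])  # (mean, std) of the bias
        draws.append(forward(x, kernel, w_out, b_out))
    return statistics.mean(draws), statistics.stdev(draws)


window = [0.2, 0.4, 0.1, 0.3, 0.5]   # hypothetical normalized volatilities
kernel = [0.5, 0.5]                  # hypothetical fixed convolution kernel
posterior = {"w_out": (1.0, 0.1), "b_out": (0.0, 0.05)}  # assumed posterior
pred_mean, pred_std = predictive_distribution(window, kernel, posterior)
```

A wider spread across draws signals that the model is less certain about that forecast, which is the additional information a Bayesian treatment provides over a point prediction.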

3.3 Evaluation metrics

This study divides the volatility of the China CSI Medical Service Index into three time periods for prediction analysis and comparison. The first period is pre-pandemic, spanning from December 31, 2004, to December 31, 2019. The second period covers the pandemic, from January 1, 2020, to December 31, 2022. The third period is post-pandemic, extending from January 1, 2023, to July 9, 2024. In the Bayesian Convolutional Neural Network (Bayes-CNN) model, key evaluation metrics such as Mean Absolute Error (MAE), Mean Squared Error (MSE), and Mean Absolute Percentage Error (MAPE) are employed to assess the model's predictive performance.

Mean Absolute Error (MAE) is the average of the absolute errors between the predicted values and the actual values. The formula is as follows:

$$\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| y_i - \hat{y}_i \right| \tag{8}$$

where $n$ is the number of samples, $y_i$ is the actual value of the $i$-th sample, and $\hat{y}_i$ is the predicted value of the $i$-th sample. MAE measures the average deviation of predicted values from the actual values. Its unit is consistent with that of the original data, making it easy to interpret. MAE is robust to outliers as it considers the absolute value of errors rather than their squares, which means it is less sensitive to extreme values.

The Mean Squared Error (MSE) is the average of the squared differences between predicted values and actual values. The formula is given by:

$$\mathrm{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2 \tag{9}$$

where $n$ is the number of samples, $y_i$ is the actual value of the $i$-th sample, and $\hat{y}_i$ is the predicted value of the $i$-th sample. Due to the squaring of the differences, MSE is more sensitive to large errors. This makes MSE useful for penalizing significant deviations more heavily, thus enforcing a stricter reduction of large errors during model training. MSE is often used as an optimization objective function in many machine learning models, especially in regression problems.

The Mean Absolute Percentage Error (MAPE) is the average of the absolute percentage errors between predicted values and actual values. The formula is given by:

$$\mathrm{MAPE} = \frac{1}{n} \sum_{i=1}^{n} \left| \frac{y_i - \hat{y}_i}{y_i} \right| \times 100\% \tag{10}$$

In the formula, $n$ represents the number of samples, $y_i$ denotes the actual value of the $i$-th sample, and $\hat{y}_i$ represents the predicted value of the $i$-th sample. MAPE is a percentage metric, which is applicable to data with different scales, making it convenient for comparing the performance of different datasets or models. MAPE provides a ratio of prediction error relative to the actual values, making it easy to understand and interpret, and thus offering intuitive insights.

In the Bayes-CNN model, these metrics are used to evaluate the model's predictive performance, ensuring its accuracy and stability. MAE: Measures the average prediction error of the model, helping to understand the overall performance of the model on the data. MSE: Penalizes large errors, aiding in the optimization of the model to reduce the occurrence of significant deviations. MAPE: Provides the average level of relative error, making it suitable for scenarios where comparisons between different datasets are necessary. By employing these evaluation metrics, one can comprehensively assess the performance of the Bayes-CNN model in prediction tasks, and make adjustments and optimizations to enhance its predictive accuracy and reliability.
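The three metrics can be computed directly from their definitions, as in the sketch below. The sample values are hypothetical volatilities rather than results from this study, and the guard against zero actual values in `mape` is an added assumption, since the percentage error is undefined when an actual value equals zero.

```python
def mae(y_true, y_pred):
    """Mean Absolute Error, Equation (8)."""
    return sum(abs(y - p) for y, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    """Mean Squared Error, Equation (9)."""
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true, y_pred):
    """Mean Absolute Percentage Error in percent, Equation (10)."""
    if any(y == 0 for y in y_true):
        raise ValueError("MAPE is undefined when an actual value is zero")
    total = sum(abs((y - p) / y) for y, p in zip(y_true, y_pred))
    return 100.0 * total / len(y_true)


# Hypothetical actual and predicted volatilities for three days.
y_true = [0.020, 0.040, 0.010]
y_pred = [0.022, 0.036, 0.010]
scores = {
    "MAE": mae(y_true, y_pred),    # about 0.002
    "MSE": mse(y_true, y_pred),    # about 6.67e-06
    "MAPE": mape(y_true, y_pred),  # about 6.67 (percent)
}
```

In this example the first two days each miss by 10 percent of the actual value, yet the second day contributes four times as much to MSE as the first, which shows how squaring emphasizes larger absolute errors.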

4 Experiments and results

4.1 Data preprocessing

In the volatility prediction of financial time series data, the Bayes-CNN model combines the efficient feature extraction capabilities of CNNs with the robust uncertainty handling of Bayesian inference, enabling it to provide precise predictions and uncertainty assessments. In this study, we first preprocess the data. Financial time series data are standardized and normalized to ensure the stability and consistency of the input data. We employ min-max normalization to scale the original time series data to the range [0, 1], as shown in the following equation:

$$x_i' = \frac{x_i - x_{\min}}{x_{\max} - x_{\min}} \tag{11}$$

The normalized data point, denoted as $x_i'$, is obtained from the original data point $x_i$, with $x_{\min}$ and $x_{\max}$ representing the minimum and maximum values in the dataset, respectively. This normalization process mitigates the effects of differing scales, allowing for comparisons and processing of features on a uniform scale. During the model training process, historical volatility data are used to train the Bayes-CNN model. The parameters of the CNN component are optimized, and the Bayesian inference module is refined to estimate the posterior distribution of the model parameters. The trained Bayes-CNN model is then utilized to predict future volatility.
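Min-max normalization as in Equation (11) can be sketched as a fit step that records the extremes and a transform step that applies them. The function names and sample values are hypothetical. Fitting the minimum and maximum on the training portion only is a common precaution against look-ahead bias; the text does not state whether this was done, so it is shown here purely as an assumption.

```python
def min_max_fit(values):
    """Return (x_min, x_max) of the reference sample."""
    return min(values), max(values)


def min_max_transform(values, x_min, x_max):
    """Apply Equation (11) using previously fitted extremes."""
    if x_max == x_min:
        raise ValueError("x_max and x_min must differ")
    return [(v - x_min) / (x_max - x_min) for v in values]


train = [0.010, 0.030, 0.050]   # hypothetical training volatilities
test = [0.020, 0.060]           # hypothetical later observations

x_min, x_max = min_max_fit(train)
train_scaled = min_max_transform(train, x_min, x_max)
test_scaled = min_max_transform(test, x_min, x_max)
```

With extremes fitted on the training data, later observations can fall outside [0, 1], as the second test value does here; this is expected and simply signals a value beyond the training range.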

4.2 Optimization results at different periods

In the Bayes-CNN model, Bayesian optimization is used for hyperparameter tuning: it leverages Bayesian inference to predict the optimal values of the hyperparameters. The selection of hyperparameters has a significant impact on model performance in deep learning, and Bayesian optimization provides an efficient approach to search for the best combination of these hyperparameters. The specific steps are as follows:

(1) Define the Objective Function: First, define the objective function to be minimized, typically the validation error or loss function of the model, in order to find the optimal hyperparameters.

(2) Select Prior Distributions: Choose prior distributions for the hyperparameters. These distributions express initial beliefs about the hyperparameters; for example, they may be uniformly distributed over a specific range.

(3) Initial Sampling: Perform initial sampling in the hyperparameter space, evaluate the objective function, and use these points as training data to construct a probabilistic model.

(4) Construct the Probabilistic Model: Use the data from the initial sampling to build a probabilistic model of the objective as a function of the hyperparameters. This is typically a Gaussian Process, which captures the relationship between the hyperparameters and the objective function.

(5) Obtain the Posterior Distribution: Update the prior distribution using Bayes' theorem to obtain the posterior distribution. The posterior distribution reflects beliefs about the hyperparameters based on the observed data.

(6) Determine the Optimization Point: Use the probabilistic model to identify the next sampling point that provides the most information. This is often achieved by maximizing an acquisition function computed from the probabilistic model, such as Expected Improvement (EI) or Gaussian Process Upper Confidence Bound (GP-UCB).

(7) Iterative Optimization: Evaluate the objective function at the selected optimization point and update the probabilistic model with the new data point. Repeat steps 5 through 7 until a stopping condition is met, such as reaching a predetermined number of iterations or no significant improvement in the objective function.

(8) Select the Best Hyperparameters: After all iterations are complete, choose the combination of hyperparameters that minimizes the objective function.
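The steps above can be sketched for a single hyperparameter as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: the surrogate is a Gaussian Process with a fixed squared-exponential kernel, the acquisition function is Expected Improvement maximized over a grid, and `toy_validation_loss` is a hypothetical stand-in for the real objective of training the network and measuring validation loss.

```python
import math
import random


def rbf(a, b, length=0.2):
    """Squared-exponential kernel between two scalar hyperparameter values."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)


def cholesky(A):
    """Lower-triangular Cholesky factor of a symmetric positive-definite matrix."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(A[i][i] - s)
            else:
                L[i][j] = (A[i][j] - s) / L[j][j]
    return L


def chol_solve(L, b):
    """Solve (L L^T) x = b by forward, then backward substitution."""
    n = len(b)
    z = [0.0] * n
    for i in range(n):
        z[i] = (b[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (z[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def fit_gp(xs, ys, noise=1e-4):
    """Steps 4-5: condition the GP surrogate on all evaluated points."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    L = cholesky(K)
    mean_y = sum(ys) / len(ys)
    alpha = chol_solve(L, [y - mean_y for y in ys])

    def predict(x):
        """Posterior mean and standard deviation of the objective at x."""
        k = [rbf(a, x) for a in xs]
        mu = mean_y + sum(ki * ai for ki, ai in zip(k, alpha))
        var = 1.0 - sum(ki * vi for ki, vi in zip(k, chol_solve(L, k)))
        return mu, math.sqrt(max(var, 1e-12))

    return predict


def expected_improvement(mu, sigma, best):
    """EI for minimization: expected amount by which a point beats `best`."""
    z = (best - mu) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return (best - mu) * cdf + sigma * pdf


def bayes_opt(objective, low, high, n_init=4, n_iter=15, seed=0):
    rng = random.Random(seed)
    # Steps 2-3: uniform prior over [low, high] and initial random sampling.
    xs = [rng.uniform(low, high) for _ in range(n_init)]
    ys = [objective(x) for x in xs]
    grid = [low + (high - low) * i / 200 for i in range(201)]
    for _ in range(n_iter):  # Step 7: iterate for a fixed budget.
        predict = fit_gp(xs, ys)
        best = min(ys)
        candidates = [x for x in grid if x not in xs]
        # Step 6: choose the candidate with the highest acquisition value.
        x_next = max(candidates,
                     key=lambda x: expected_improvement(*predict(x), best))
        xs.append(x_next)
        ys.append(objective(x_next))
    i_best = min(range(len(ys)), key=ys.__getitem__)  # Step 8.
    return xs[i_best], ys[i_best]


def toy_validation_loss(x):
    """Step 1 (hypothetical objective): a smooth loss with its minimum at x = 0.3."""
    return (x - 0.3) ** 2


best_x, best_y = bayes_opt(toy_validation_loss, 0.0, 1.0)
```

In practice the objective would train the model for each candidate setting, which is why keeping the number of evaluations small matters. A production setup would normally rely on an established optimization library rather than a hand-written surrogate like this one.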

Bayesian optimization is a highly effective method for hyperparameter tuning, particularly when each evaluation of the objective function is expensive, because it selects sampling points intelligently and thereby reduces the number of required evaluations. Additionally, since the Bayes-CNN model itself possesses the capability of uncertainty estimation, combining it with Bayesian optimization can further enhance the model's generalization ability and robustness.

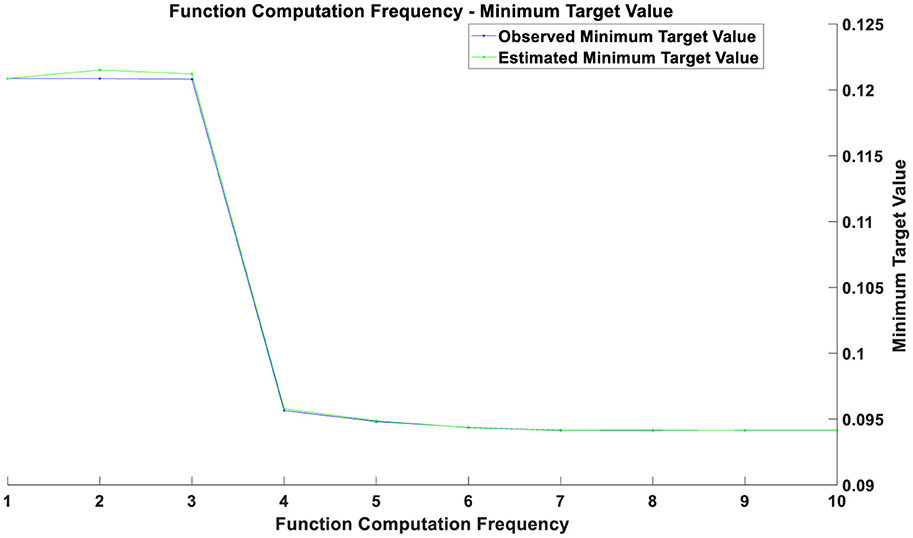

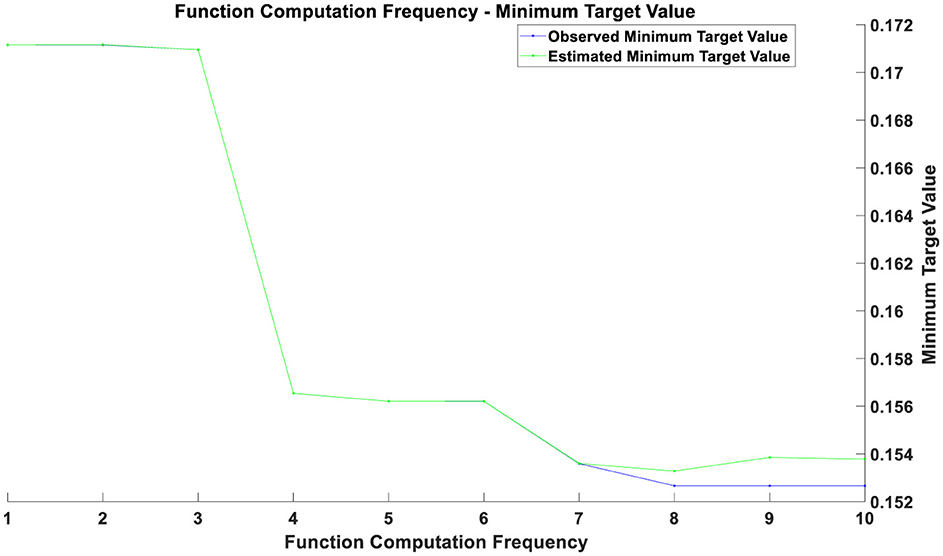

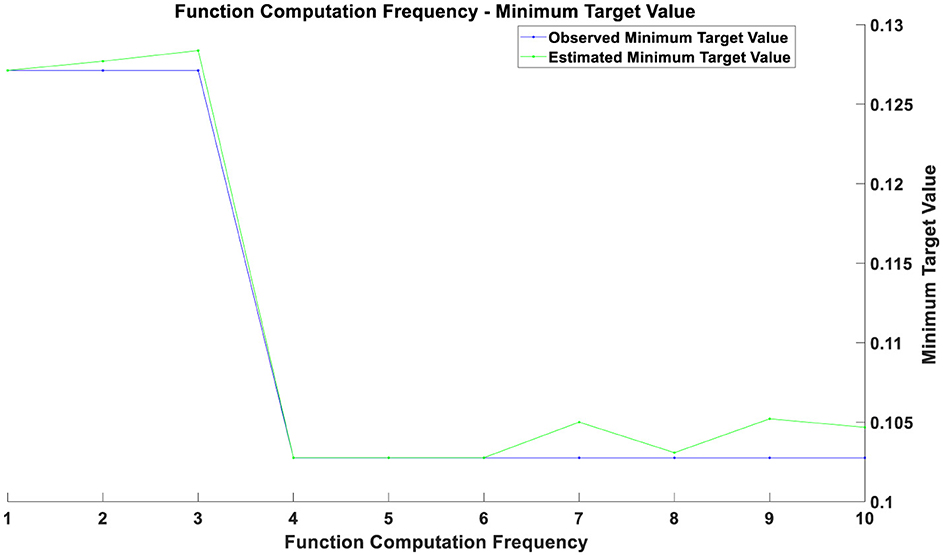

Overall, the optimization process consists of two main components. The first component involves optimizing the parameters of the CNN: local features of time series data are extracted through convolutional layers, and the dimensionality of the feature maps is reduced using pooling layers. Backpropagation and gradient descent are used to adjust the model parameters, minimizing the error on the training data. The second component focuses on the optimization of the Bayesian inference module: this study employs the Markov Chain Monte Carlo (MCMC) method to estimate the posterior distribution of the model parameters. The MCMC method involves constructing a Markov chain to sample from the posterior distribution. The goal of this optimization process is to find the optimal parameter distribution that enhances the model's predictive accuracy and stability when faced with new data. The optimization results before the COVID-19 pandemic are illustrated in Figure 1, those during the pandemic in Figure 2, and those after the pandemic in Figure 3. The vertical axis represents the value of the objective function, which is minimized during optimization. The horizontal axis indicates the number of function evaluations, reflecting the number of iterations or evaluations conducted during the optimization process.
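To make the MCMC idea concrete, the sketch below samples the posterior of a single parameter with a random-walk Metropolis-Hastings chain. It is a toy illustration under stated assumptions, not the sampler used in this study: the text specifies only "MCMC", so the choice of Metropolis-Hastings, the Gaussian prior and likelihood, and the data values are all hypothetical.

```python
import math
import random


def log_posterior(theta, data, noise_sd=0.5, prior_sd=10.0):
    """Unnormalized log posterior: Gaussian prior on theta plus Gaussian likelihood."""
    log_prior = -0.5 * (theta / prior_sd) ** 2
    log_lik = sum(-0.5 * ((y - theta) / noise_sd) ** 2 for y in data)
    return log_prior + log_lik


def metropolis_hastings(data, n_samples=20000, burn_in=2000, step=0.3, seed=0):
    """Random-walk Metropolis-Hastings chain targeting the posterior of theta."""
    rng = random.Random(seed)
    theta = 0.0
    current = log_posterior(theta, data)
    samples = []
    for i in range(n_samples + burn_in):
        proposal = theta + rng.gauss(0.0, step)  # symmetric proposal
        candidate = log_posterior(proposal, data)
        # Accept with probability min(1, posterior ratio).
        if rng.random() < math.exp(min(0.0, candidate - current)):
            theta, current = proposal, candidate
        if i >= burn_in:  # discard early samples taken before the chain settles
            samples.append(theta)
    return samples


# Hypothetical observations, for illustration only.
observations = [1.2, 0.8, 1.1, 0.9, 1.0]
draws = metropolis_hastings(observations)
posterior_mean = sum(draws) / len(draws)
posterior_sd = math.sqrt(sum((d - posterior_mean) ** 2 for d in draws) / len(draws))
```

For this conjugate toy model the posterior is known in closed form (mean close to 1.0, standard deviation close to 0.22), which makes it a convenient check that the chain is sampling correctly. For network weights no such closed form exists, and the spread of the draws is what provides the uncertainty estimate.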

Figure 1. Optimization chart before the pandemic.

Figure 2. Optimization chart during the pandemic.

Figure 3. Optimization chart after the pandemic.

Each figure contains two curves: the estimated minimum objective value and the observed minimum objective value. If the two curves are close to each other or coincide after a relatively small number of function evaluations, this indicates that the optimization algorithm is efficient and can quickly find solutions near the optimal one. Conversely, if the curves only begin to approach each other after a higher number of function evaluations, it may suggest that the optimization process requires more iterations to converge. During the initial phase (with a smaller number of function evaluations), a rapid decrease in the objective value indicates that the optimization process is quickly approaching the optimal solution. As the number of function evaluations increases, the rate of decrease in the objective value gradually slows down. This may be due to diminishing returns in improvement as the solution approaches the optimal point. The gap between the estimated minimum objective value and the observed minimum objective value decreases over time, reflecting an improvement in the accuracy of the estimated model. From the figures, it can be observed that as the number of function evaluations increases, the observed minimum objective value stabilizes, which may indicate that the optimal solution or convergence point has been approached. The estimated minimum objective value curve closely aligns with the observed minimum objective value curve, suggesting that the estimated model is consistent with the actual observed values.

The optimization results prior to the pandemic, as shown in Figure 1, indicate that the optimization process was continually advancing, with the objective value progressively decreasing. This suggests that the optimization algorithm performed well in searching for the optimal solution. In the initial phase (approximately the first 2–3 training epochs), both training and validation losses were relatively high. This is because the model was just beginning to learn the data features and had not yet been optimized to its best state. Between the 3rd and 4th training epochs, there was a significant decrease in both training and validation losses, indicating that the model rapidly learned the important features of the data during this period, and the optimization process was highly effective at this stage. From the 4th training epoch onward, the training and validation losses stabilized at a low level, suggesting that the model parameters had essentially converged and reached a relatively stable state. Throughout the training process, the training and validation losses were very close and almost coincided, indicating that the model performed consistently across the training and validation sets, with no significant overfitting or underfitting observed. The optimization graph demonstrates that the model converged quickly in the early training stages and maintained stability in the later stages, reflecting good optimization performance on the pre-pandemic data. The significant decrease in the objective value likely indicates that the optimization problem was approaching or had reached a relatively ideal solution.

The optimization results during the pandemic, as depicted in Figure 2, reveal that in the initial phase (approximately the first 3 training epochs), both training and validation losses were high. This is because the model had not yet effectively learned the data features at this early stage. Between the 3rd and 5th training epochs, there was a rapid decrease in both training and validation losses, indicating that the model learned a substantial amount of
